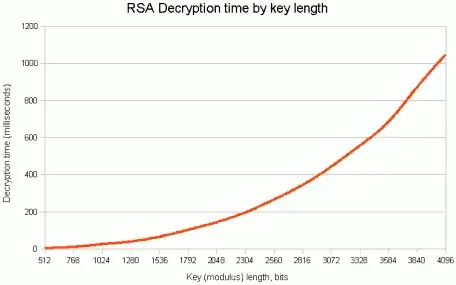

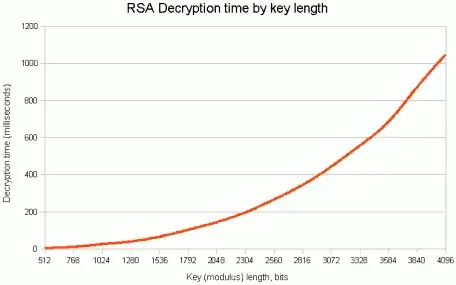

Each bit of a key increases the difficulty of a brute-force attack exponentially but there is a trade-off. Adding more bits to the key will negatively effect the speed of encryption/decryption. The actual amount of this speed loss depends on the algorithm, for example in RSA (in theory) for a n-bit key, computational effort for encryption is proportional to n^2, while effort for decryption is proportional to n^3, so doubling the key in RSA means that encryption will be four times slower, and decryption will be (almost) eight times slower.

Another thing I think is worth mentioning and thanks for Perseids for pointing it out.

There is a limit where more time needed to break the algorithm becomes meaningless, because for practical purposes it is already "forever". As a "real world" analogy imagine you are packing rations for a short trip in the desert and are deciding how much water you want to take with you. For a small amount of water "more is better" is a reasonable approach, because you might need to stay longer than planned or spill some water. But when you already take the whole Amazon with you then increasing that amount to all the water on the planet does not help. That are the dimensions we are talking about when someone tries to convince you to use 8192 bit RSA.

(Diagram from Javamex)

For elliptic curve algorithms, we assume that finding the discrete logarithm of a random elliptic curve element with respect to a publicly known base point is infeasible. The primary benefit of ECC is in fact the smaller key size e.g., a 256-bit ECC public key should provide comparable security to a 3072-bit RSA public key. Based on the fact that the main algorithms used to solve ECDLP (e.g baby-step giant-step) need n^(1/2) steps, increase/decrease in n-bits will affect de/encrption speeds.

As for your side question, I wouldn't worry too much but considering you are talking about length, "longer" is preferred.