As pointed out by schroeder, there are many moving parts here, so in many instances the same argument can count as a positive or a negative.

Here's my argument for one case.

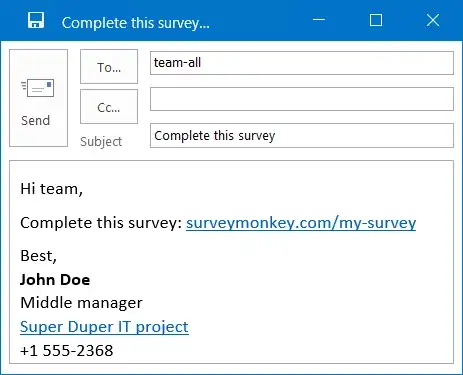

Let the case be this: employees of this org share links via email pretty often. Consequently, employees often click and visit links from emails.

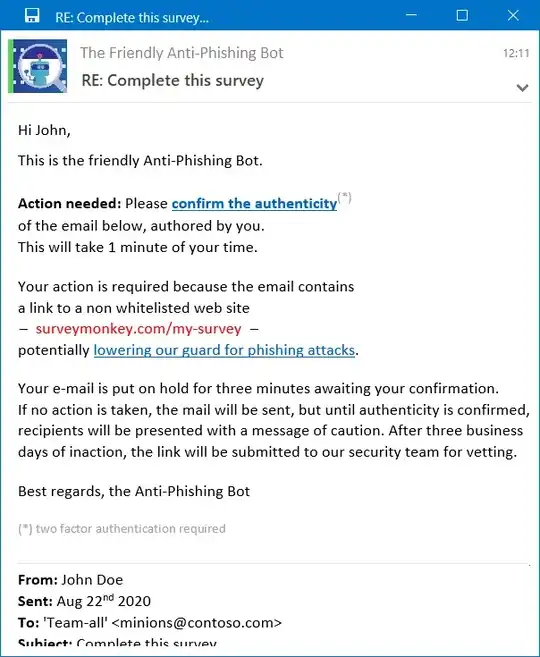

From the sender's point of view: after several such messages, the sender will likely develop a sense of whether it is better (faster) for her to let it be or to "confirm authenticity". In the best case, she can "confirm authenticity" every time using a mental shortcut, if one is available. That is, a no-brainer that gets her request accepted asap. Let's suppose she has to "confirm authenticity" by filling in a one-line form with her judgement of authenticity. When I worked in a SOC and completed many tickets, my colleagues and I developed standard "fillings" to copy-paste without thinking, because thinking is expensive.

This sender scenario looks okay from a security POV and okay-ish from a usability POV to me.

The worst-case sender scenario is that she ignores the confirmation and lets the email be sent with the warning message.

This sender scenario looks the same from the security POV and a little worse from the usability POV (receiving bot emails).

Let's look at the receivers' scenario.

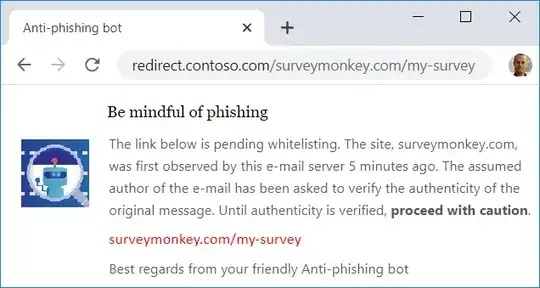

Receivers are used to clicking and visiting links shared via email.

In the best case scenario, the links are vetted and authenticated.

This seems ok. However, a question arises about how they perceive this mechanism (if they know about it). Usually, the organizational infrastructure is perceived as secure, so they mostly feel safe clicking links at work. If they know this mechanism is in place, it may reinforce that perception. This is not great if the whole system does not work in the end. But it is neutral if it works, IMO.

In the worst-case receiver scenario, all (or many) links are flagged as potentially dangerous, causing the warning to be displayed all (or many) of the time. This is bad.

It is bad because habituation to repeated and ill-perceived warnings is notoriously a big problem in human-computer interaction and is still not solved, as reviewed here, here. This means that the receivers are likely to get used to ignoring the message; they may literally become blind to it (cognitively speaking), which invalidates the whole system. This is bad from both security and usability POVs.

So, we have covered the extremes of one possible case: the case of the org with lots of link sharing.

The positive extreme is where senders always "confirm authenticity" and receivers do not perceive an "improved security". There is some burden on the sender's side from the usability point of view. No burden for receivers, though. On the security side, external/untrusted links are going to be flagged. Depending on the ratio of trusted to un-trusted shared links, e.g. trusted >> un-trusted, this may result in a positive outcome for security.

The negative extreme is where senders never "confirm authenticity" and receivers (almost certainly) will ignore the warning message. It follows that the same "security level" is kept, but usability gets worse for both the sender and (even more) the receiver.

Of course there is a continuum between the extremes, and if that continuum is measurable (e.g. how many trusted/un-trusted links are sent over time), chances are that a data-driven decision can be made. If not, a testing period with control groups may be a way to measure it.
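To make "measurable" a bit more concrete, here is a minimal sketch of the two numbers I would look at first: the share of un-trusted links, and the share of links that reach receivers with a warning. Everything in it is an assumption for illustration: the `LinkEvent` record, its fields, and the rule that a warning is shown only when a link is un-trusted and the sender did not "confirm authenticity". A real mail gateway would log this differently.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LinkEvent:
    """One link sent by email (hypothetical log record)."""
    trusted: bool    # link is on the org's trusted list (assumed to exist)
    confirmed: bool  # sender filled in the "confirm authenticity" form


def untrusted_share(events: Sequence[LinkEvent]) -> float:
    """Fraction of sent links that are un-trusted."""
    if not events:
        return 0.0
    return sum(1 for e in events if not e.trusted) / len(events)


def warning_rate(events: Sequence[LinkEvent]) -> float:
    """Fraction of sent links delivered with a warning.

    Assumption: a warning is shown only for un-trusted links
    that the sender did not confirm.
    """
    if not events:
        return 0.0
    warned = sum(1 for e in events if not e.trusted and not e.confirmed)
    return warned / len(events)


# Toy data: 8 trusted links, 1 un-trusted but confirmed, 1 un-trusted and ignored.
events = (
    [LinkEvent(trusted=True, confirmed=False)] * 8
    + [LinkEvent(trusted=False, confirmed=True)]
    + [LinkEvent(trusted=False, confirmed=False)]
)
print(untrusted_share(events), warning_rate(events))
```

A low warning rate is closer to the positive extreme above; a warning rate close to the un-trusted share means senders rarely confirm, and if that share is itself high, receivers see the warning often enough for habituation to become a concern. Where to draw the line is exactly what a trial with control groups would have to establish.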