The deduction I made off this is that after a certain key size, increase in a single bit causes Time required for search to get doubled from the previous one.

Not just after a certain key size, that's how it works in general. Assuming a random key, if it's 8 bits there will be 28 possible keys, and it will take about 27 = 128 tries on average to guess it (on average you'll guess it after searching about half the keyspace). Each additional bit in the key doubles the keyspace, and thus doubles the expected time to guess the correct key.

For Instance If My password is of 8 length and its character set is lowercase characters, then total attempts required to crack my password will be 26^8 = 208827064576 (character_set ^ keysize) (on the worst case).

So I was wondering, whether the increase in Key_Size by bits, and this character set theory is somewhat related or not.

Yes, this is the same idea. If you break a key into bytes (sets of 8 bits), then each byte added multiplies the keyspace by 28 = 256. For random lowercase passwords, each character added multiplies the password space by 26 (or about 24.7).

If yes, then why most people measure strength of key by the number of bits it has, rather then the character set it uses?

Because keys aren't related to character sets. Keys are random binary. You can represent them as 0s and 1s, or as hexadecimal, or any number of other ways, but what matters is the keyspace, which is directly related to the key length in bits.

And what is the most common character set used in password/key cracking, as most character tables (ASCII/UTF8) contains a lot of NPC's and/or Emoji's etc. so are they excluded during the key search process or not?

That depends on the password cracking tool and configuration being used, though printable ASCII is common given that most passwords are ASCII. Many password cracking tools also prioritize common patterns and mutations of previously cracked passwords. Passwords are generally crackable only because they are chosen poorly.

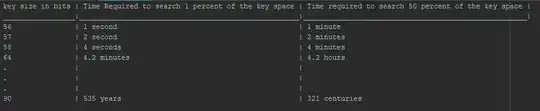

Keys generally aren't attacked directly, as 128 bit keys are impossible to brute-force. An old 64 bit key might be brute-forced directly, in which case there's not really a character set used per se, it's just iterating all possible sequences of 64 bits.