No, Docker containers are not more secure than a VM.

Quoting Daniel Shapira:

> In 2017 alone, 434 linux kernel exploits were found, and as you have seen in this post, kernel exploits can be devastating for containerized environments. This is because containers share the same kernel as the host, thus trusting the built-in protection mechanisms alone isn’t sufficient.

1. Kernel exploits from a container

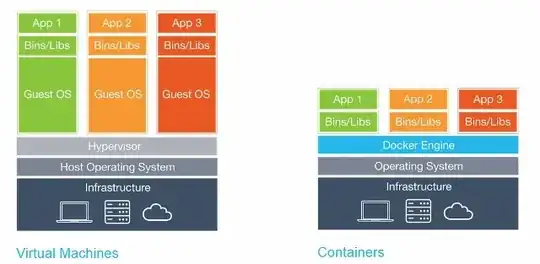

If someone exploits a kernel bug inside a container, they have exploited it on the host OS. If the exploit allows code execution, the code runs on the host OS, not inside the container.

If the exploit allows arbitrary memory access, the attacker can read or modify the data of any other container.

On a VM, the attack chain is longer: the attacker has to exploit the VM kernel, then the hypervisor, and then the host kernel (which may not be the same as the VM kernel).
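A quick way to see the shared kernel for yourself is to print the kernel release on the host and again inside a container. This is only a minimal sketch, assuming Docker runs natively on a Linux host (on setups where Docker itself runs inside a helper VM, the container sees that VM's kernel instead). The function name `kernel_release` is just an illustrative choice.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process runs on."""
    return platform.release()


if __name__ == "__main__":
    # Run this once on the host and once inside a container on that host.
    # With a shared kernel, both runs should print the same release string.
    print(kernel_release())
```

Running the same script inside a VM on that host would normally print the guest kernel's release, which can differ from the host's.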

2. Resource starvation

Because all containers share the same kernel and the same resources, if access to a resource is not constrained, one container can use it all up and starve the host OS and the other containers.

On a VM, resources are allocated by the hypervisor, so no VM can deprive the host OS of any resource, and the hypervisor itself can be configured to use only a restricted share of resources.
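Containers can be constrained too, but you have to ask for it. As a rough sketch, the helper below builds a `docker run` command line that caps memory, CPU, and process count using the `--memory`, `--cpus`, and `--pids-limit` flags. The function name and the default values are assumptions chosen for illustration, not recommendations.

```python
def limited_run_command(image, memory="256m", cpus="0.5", pids_limit=100):
    """Build a `docker run` argv that caps memory, CPU, and process count.

    The defaults are illustrative placeholders; pick values that fit
    the workload.
    """
    return [
        "docker", "run", "--rm",
        "--memory", memory,               # hard memory cap
        "--cpus", cpus,                   # fraction of CPU time allowed
        "--pids-limit", str(pids_limit),  # limits fork bombs
        image,
    ]


if __name__ == "__main__":
    print(" ".join(limited_run_command("alpine")))
```

The list can be passed to `subprocess.run` as-is. Without flags like these, a container is only limited by what the host has.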

3. Container breakout

If any user inside a container is able to escape it using some exploit or misconfiguration, they will have access to all containers running on the host. This is because the user running the Docker engine is the same user running the containers. If an exploit executes code on the host, it runs with the privileges of the Docker engine, so it can access any container.
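Misconfiguration is the part you can audit. The sketch below takes one entry of the JSON array printed by `docker inspect <container>` (already parsed into a dict) and flags a few settings that make a breakout easier. The function name `risky_settings` and the selection of checks are my own assumptions; this is nowhere near a complete audit.

```python
def risky_settings(inspect_entry: dict) -> list:
    """Flag breakout-friendly settings in one parsed `docker inspect` entry."""
    host_cfg = inspect_entry.get("HostConfig") or {}
    config = inspect_entry.get("Config") or {}
    findings = []

    if host_cfg.get("Privileged"):
        findings.append("privileged mode")
    if host_cfg.get("CapAdd"):
        findings.append("added capabilities: " + ", ".join(host_cfg["CapAdd"]))
    if host_cfg.get("PidMode") == "host":
        findings.append("shares the host PID namespace")

    # An empty User field means the image default, treated here as root.
    user = (config.get("User") or "").split(":")[0]
    if user in ("", "0", "root"):
        findings.append("runs as root inside the container")

    return findings
```

To use it, load the output with `json.loads` and call the function on each element of the resulting list. An empty result only means none of these particular settings were found.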

4. Data separation

In a Docker container, some resources are not namespaced:

- SELinux

- Cgroups

- File systems under /sys, /proc/sys, /proc/sysrq-trigger, /proc/irq, /proc/bus

- /dev/mem, /dev/sd* file system

- Kernel modules

If an attacker can exploit any of these elements, they will own the host OS.

A VM OS does not have direct access to any of these elements. It talks to the hypervisor, and the hypervisor makes the appropriate system calls to the host OS. The hypervisor filters out invalid calls, adding a layer of security.
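To get a feel for how exposed a given container is, you can check from inside it which of the file system paths named above are writable by the current process. The following is a minimal sketch; the names `SENSITIVE_PATTERNS` and `writable_sensitive_paths` are hypothetical, and a writable path is only a hint of exposure, not proof of an exploitable hole.

```python
import glob
import os

# Paths taken from the list of non-namespaced resources above.
SENSITIVE_PATTERNS = [
    "/sys",
    "/proc/sys",
    "/proc/sysrq-trigger",
    "/proc/irq",
    "/proc/bus",
    "/dev/mem",
    "/dev/sd*",
]


def writable_sensitive_paths(patterns=SENSITIVE_PATTERNS):
    """Return the existing paths matching `patterns` that we can write to."""
    writable = []
    for pattern in patterns:
        # glob expands wildcards and silently skips paths that do not exist.
        for path in glob.glob(pattern):
            if os.access(path, os.W_OK):
                writable.append(path)
    return writable


if __name__ == "__main__":
    for path in writable_sensitive_paths():
        print("writable:", path)
```

In a default unprivileged container, I would expect most of these to be read-only or absent, while a container started in privileged mode will usually report far more.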

5. Docker socket

The default Docker Unix socket (/var/run/docker.sock) can be mounted by any container if not properly secured. A container that mounts this socket can talk to the Docker daemon directly, so it can shut down or start containers and create new images.
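A simple check, run from inside a container, is to see whether the socket is present at all. This sketch only tests that the path exists and is a Unix socket; it does not try to connect to the daemon. The function name `is_unix_socket` is an illustrative choice, and the default path is the one mentioned above.

```python
import os
import stat


def is_unix_socket(path="/var/run/docker.sock"):
    """Return True if `path` exists and is a Unix domain socket."""
    try:
        mode = os.stat(path).st_mode
    except OSError:
        # Missing path or no permission to stat it.
        return False
    return stat.S_ISSOCK(mode)


if __name__ == "__main__":
    if is_unix_socket():
        print("Docker socket is visible from this environment")
    else:
        print("Docker socket not found at the default path")
```

If this returns True inside a container that has no business managing Docker, the socket mount is worth removing.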

If Docker is properly configured and secured, you can achieve a high level of security with a container, but it will still be lower than that of a properly configured VM. No matter how many hardening tools are employed, a VM will always be more secure. Bare metal isolation is even more secure than a VM. Some bare metal implementations (IBM PR/SM, for example) can guarantee that the partitions are as separated as if they were on separate hardware. As far as I know, there's no way to escape PR/SM virtualization.