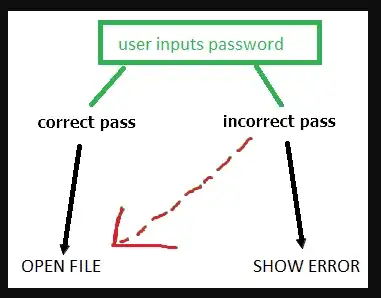

Sure, software can do whatever you program it to do.

As a trivial example, if I was provided a Python program that checked for a password:

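    import sys

    # raw_input is Python 2; on Python 3 use input() instead
    password = raw_input('Enter your password: ')
    if password != 'oh-so-secret':
        sys.exit(1)  # wrong password: bail out before the secure part
    do_secure_thing()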

I could quite simply change it to not care what the password is:

    # The prompt is still shown, but the answer is never checked
    password = raw_input('Enter your password: ')
    do_secure_thing()

(In this case, you could also just see what the hard-coded plaintext password is and enter it.)

With compiled binaries, you first have to disassemble or decompile them (or patch the machine code directly) before you can change their behavior, but there are plenty of disassemblers and decompilers for common languages and platforms.

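This is why code signing exists: a signature over the executable lets the system (or the user) detect that the code was modified after it was signed.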
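To make the detection idea concrete, here is a minimal sketch. It only compares a SHA-256 digest recorded earlier against the code as it exists now. Real code signing goes further and uses an asymmetric signature plus a trusted certificate chain, so that the attacker can't simply replace the recorded digest too. The names `digest_of`, `is_unmodified`, and the sample byte strings are made up for illustration.

```python
import hashlib
import hmac


def digest_of(code: bytes) -> str:
    """Return the SHA-256 digest of the program bytes as a hex string."""
    return hashlib.sha256(code).hexdigest()


def is_unmodified(code: bytes, trusted_digest: str) -> bool:
    """Check the current bytes against a digest recorded at 'signing' time."""
    return hmac.compare_digest(digest_of(code), trusted_digest)


# Hypothetical program contents before and after the password check is removed
original = b"if password != 'oh-so-secret':\n    sys.exit(1)\ndo_secure_thing()\n"
patched = b"do_secure_thing()\n"

# Assumption: this digest is stored somewhere the attacker cannot change
trusted_digest = digest_of(original)

print(is_unmodified(original, trusted_digest))
print(is_unmodified(patched, trusted_digest))
```

The untouched program passes the check and the patched one fails it, which is the signal a loader would use to refuse to run the modified code.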

Now, if this isn't your own system, your options may be a bit more limited. On most Unix-like systems, executables are generally stored with read- and execute-only permissions for non-root users; thus, if you aren't root, you can't modify the target executable. There are other less direct methods to try, but if those fail you're looking at moving to a different attack vector.

For instance, a keylogger will record the passwords that the user enters, allowing you to reuse them on your own later.

Another method of attack that doesn't require modifying the program itself is to modify the dynamic library load path (for example, LD_LIBRARY_PATH on Linux) so that the program picks up a library that you have written rather than the one it expects. If the program uses an external, dynamically-loaded library for password management, and that library has a function verify_password() that returns a boolean, you can write your own verify_password() that always returns true, and load that in instead.
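The same trick can be sketched in Python, where the module search path (`sys.path`) plays the role of the library load path. This is only an analogy to native library hijacking, not the native mechanism itself. The module name `pwcheck_demo` and the helpers `write_module` and `load_verifier` are hypothetical, and the "real" library is a stand-in I wrote for the demo.

```python
import importlib
import os
import sys
import tempfile

MODULE_NAME = "pwcheck_demo"  # hypothetical library name

REAL_SOURCE = "def verify_password(pw):\n    return pw == 'oh-so-secret'\n"
FAKE_SOURCE = "def verify_password(pw):\n    return True\n"


def write_module(directory, source):
    """Write a pwcheck_demo.py containing the given source into directory."""
    with open(os.path.join(directory, MODULE_NAME + ".py"), "w") as handle:
        handle.write(source)


def load_verifier(search_dirs):
    """Import the module with search_dirs placed first on the search path."""
    sys.modules.pop(MODULE_NAME, None)  # forget any previously loaded copy
    importlib.invalidate_caches()
    saved_path = list(sys.path)
    sys.path[:0] = search_dirs
    try:
        module = importlib.import_module(MODULE_NAME)
    finally:
        sys.path[:] = saved_path  # restore the original search path
    return module.verify_password


real_dir = tempfile.mkdtemp()
fake_dir = tempfile.mkdtemp()
write_module(real_dir, REAL_SOURCE)
write_module(fake_dir, FAKE_SOURCE)

# Same import, different search order: whichever directory comes first wins
honest = load_verifier([real_dir])
hijacked = load_verifier([fake_dir, real_dir])

print(honest("wrong guess"))
print(hijacked("wrong guess"))
```

The calling program never changes. Only the directory that gets searched first does, which is why locking down the load path matters as much as locking down the executable.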

The real distinction that changes the answer from "yes" to "no" is when the password isn't actually a password, but an encryption key. If the data is encrypted, then it doesn't matter what any outside programs do: the data is still encrypted until the decryption algorithm is fed the proper key.
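Here is a rough sketch of why skipping the check doesn't help in that case. It uses a toy XOR stream cipher built from SHA-256, which is NOT a real cipher and shouldn't be used to protect anything. The point is only that the password feeds the math instead of an `if` statement. The function names, the fixed salt, and the sample data are all assumptions for the demo.

```python
import hashlib


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 of the key plus a counter, repeated as needed."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def xor_with_password(data: bytes, password: str) -> bytes:
    """Encrypt or decrypt (XOR is symmetric) with a key derived from password."""
    # Fixed salt for the demo only; a real system would use a random salt
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), b"demo-salt", 100_000)
    stream = keystream(key, len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


secret = b"the data worth protecting"
ciphertext = xor_with_password(secret, "oh-so-secret")

# There is no password comparison to patch out: a wrong key just yields garbage
with_right_key = xor_with_password(ciphertext, "oh-so-secret")
with_wrong_key = xor_with_password(ciphertext, "anything-else")

print(with_right_key == secret)
print(with_wrong_key == secret)
```

An attacker can rewrite this program however they like, but without the right password the only thing it will ever produce from the ciphertext is noise.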